The company behind ChatGPT could start calling the authorities when young users talk seriously about suicide, its co-founder has said.

Sam Altman raised fears that as many as 1,500 people a week could be discussing taking their own lives with the chatbot before doing so.

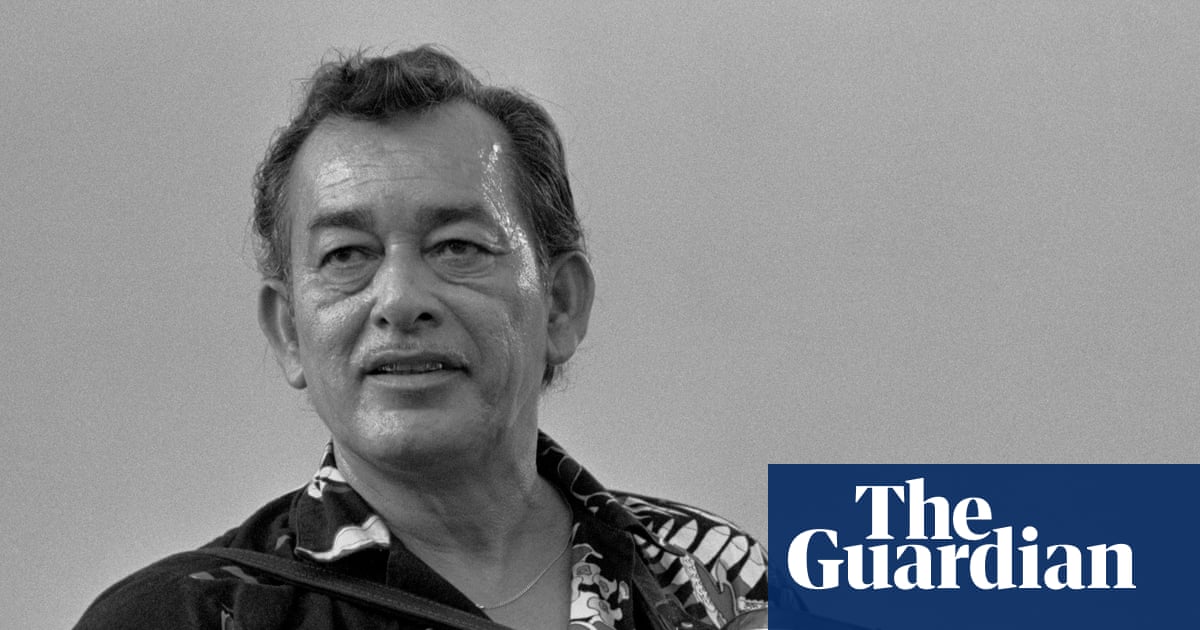

The chief executive of San Francisco-based OpenAI, which operates the chatbot with an estimated 700 million global users, said the decision to train the system so the authorities were alerted in such emergencies was not yet final. But he said it was “very reasonable for us to say in cases of, young people talking about suicide, seriously, where we cannot get in touch with the parents, we do call authorities”.

Altman highlighted the possible change in an interview with the podcaster Tucker Carlson on Wednesday. The interview came after OpenAI and Altman were sued by the family of Adam Raine, a 16-year-old from California who killed himself after what his family’s lawyer called “months of encouragement from ChatGPT”. According to the legal claim, ChatGPT guided him on whether his method of taking his own life would work and offered to help him write a suicide note to his parents.

Altman said the issue of users taking their own lives kept him awake at night. It was not immediately clear which authorities would be called, or what information OpenAI holds about a user, such as a phone number or address, that it could share to help get assistance to them.

It would be a marked change in policy for the AI company, said Altman, who stressed “user privacy is really important”. He said that currently, if a user displays suicidal ideation, ChatGPT would urge them to “please call the suicide hotline”.

After Raine’s death in April, the $500bn company said it would install “stronger guardrails around sensitive content and risky behaviours” for users under 18 and introduce parental controls to allow parents “options to gain more insight into, and shape, how their teens use ChatGPT”.

“There are 15,000 people a week that commit suicide,” Altman told the podcaster. “About 10% of the world are talking to ChatGPT. That’s like 1,500 people a week that are talking, assuming this is right, to ChatGPT and still committing suicide at the end of it. They probably talked about it. We probably didn’t save their lives. Maybe we could have said something better. Maybe we could have been more proactive. Maybe we could have provided a little bit better advice about ‘hey, you need to get this help, or you need to think about this problem differently, or it really is worth continuing to go on and we’ll help you find somebody that you can talk to’.”

The suicide figures appeared to be a worldwide estimate. The World Health Organization says more than 720,000 people die by suicide every year, or roughly 14,000 a week.

Altman also said he would stop some vulnerable people from gaming the system to get suicide tips by claiming they wanted the information for a fictional story they were writing or for medical research.

He said it would be reasonable “for underage users and maybe users that we think are in fragile mental places more generally” to “take away some freedom”.

“We should say, hey, even if you’re trying to write the story or even if you’re trying to do medical research, we’re just not going to answer.”

A spokesperson for OpenAI declined to add to Altman’s comments, but referred to recent public statements, including a pledge to “increase accessibility with one-click access to emergency services” and “to intervene earlier and connect people to certified therapists before they are in an acute crisis.”
