Far-right extremists are using livestream gaming platforms to target and radicalise teenage players, a report has warned.

The new research, published in the journal Frontiers in Psychology, reveals how a range of extremist groups and individuals use platforms that allow users to chat and livestream while playing video games to recruit and radicalise vulnerable users, mainly young males.

UK crime and counter-terror agencies have urged parents to be especially alert to online offenders targeting youngsters during the summer holidays.

In an unprecedented move, last week Counter Terrorism Policing, MI5 and the National Crime Agency issued a joint warning to parents and carers that online offenders “will exploit the school holidays to engage in criminal acts with young people when they know less support is readily available”.

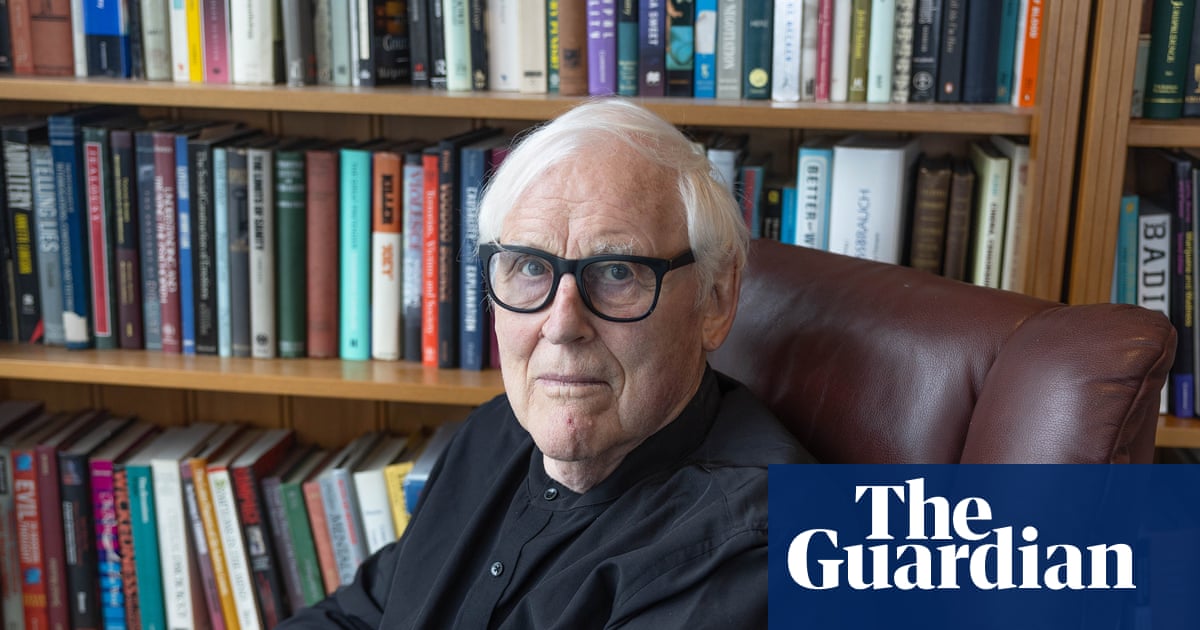

Dr William Allchorn, a senior research fellow at Anglia Ruskin University’s international policing and public protection research institute, who carried out the study with his colleague Dr Elisa Orofino, said “gaming-adjacent” platforms were being used as “digital playgrounds” for extremist activity.

Allchorn found teenage players were being deliberately “funnelled” by extremists from mainstream social media platforms to these sites, where “the nature and quantity of the content makes these platforms very hard to police”.

The most common ideology being pushed by extremist users was far right, with content celebrating extreme violence and school shootings also shared.

On Tuesday, Felix Winter, who threatened to carry out a mass shooting at his Edinburgh school, was jailed for six years after the court heard the 18-year-old had been “radicalised” online, spending more than 1,000 hours in contact with a pro-Nazi Discord group.

Allchorn said: “There has definitely been a more coordinated effort by far-right groups like Patriotic Alternative to recruit young people through gaming events that first emerged during lockdown. But since then a lot of extremist groups have been deplatformed by mainstream spaces, so individuals will now lurk on public groups or channels on Facebook or Discord, for example, and use this as a way of identifying someone who might be sympathetic to reach out to.”

He added that, while some younger users turn to extreme content for its shock value among their peers, this can make them vulnerable to being targeted.

Extremists have been forced to become more sophisticated as the majority of platforms have banned them, Allchorn said. “Speaking to local community safety teams, they told us that approaches are now about trying to create a rapport rather than making a direct ideological sell.”

The researchers also spoke to moderators, who described their frustration at inconsistent enforcement policies on their platforms and the burden of deciding whether content or users should be reported to law enforcement agencies.

While in-game chat is unmoderated, moderators said they were still overwhelmed by the volume and complexity of harmful content, including the use of hidden symbols to get around automated moderation tools that pick up banned words: for example, a string of symbols stitched together to represent a swastika.

Allchorn highlighted the need for critical digital literacy for parents as well as law enforcement so they could better understand how these platforms and subcultures operate.

Last October Ken McCallum, the head of MI5, revealed that “13% of all those being investigated by MI5 for involvement in UK terrorism are under 18”, a threefold increase in three years.

AI tools are being used to assist with moderation, but they struggle to interpret memes and language that is ambiguous or sarcastic.
